The Technical SEO Essentials Checklist (for Not-So-Technical Folks)

This is a checklist of technical SEO basics that you might not have known were basics. We know some of our readers are new to technical SEO, so we wanted to arm you with (what we believe is) the essential technical audit list of 2016. Focusing on becoming a full-stack SEO? This is the resource for you. Whether you’re a developer wunderkind or two steps away from a Luddite, this checklist is a great go-to SEO guide that anyone can run through.

One of the cornerstones of SEO is having a fast, robust and technically sound website. Nothing can sink a great campaign quite like hidden tech issues, and finding them on the fly is never fun. So, we’ve created a checklist to help you knock those issues down:

Click here to open the checklist as a Google Doc!

In addition to the SEO checklist, we’ve also listed out the free tools you need to audit each section. We’re going to focus on the technical, so we won’t cover things like broken internal links and images here.

1. Robots.txt isn’t blocking important parts of your site

Robots.txt is a file on your site that tells web robots (such as Google’s crawler) which parts of your site they are and aren’t allowed to crawl. Well-behaved crawlers check this file before they crawl your site. It’s pretty easy to accidentally block important things, especially if you’ve had developers working on the site or have just pushed a site from development to live. We see it all the time. Robots.txt has to be pretty much perfectly implemented.

You can find your robots.txt file in your site’s root folder, i.e. www.yoursite.com/robots.txt

Make sure your robots.txt file meets the following criteria. If in doubt, take down robots.txt entirely! It’s not required, and it’s far better to have Google crawl your site too much than not at all.

Robots.txt isn’t blocking your whole site

- Make sure your robots.txt file does not contain the following two lines:

User-agent: *
Disallow: /

This happens all the time when developers push updates to the site. They work in a staging environment and block search engines there so nobody discovers their unfinished work; if that staging robots.txt gets pushed live with the update, it blocks the live site too. If you see these lines in your robots file, you’re blocking the whole site to all crawlers. Also, check that you don’t see something very similar to the above, but with Googlebot (or another Google user-agent) in place of the *.

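The asterisk represents every bot (or user-agent) in existence, and the forward slash is the root of your site: the slash that comes right after your domain name. A Disallow rule matches every URL path that starts with its value, and every path on your site starts with that slash. In other words, the slash means “everything”.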
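If you’d like to check this in code rather than by eye, here’s a minimal Python sketch built on the standard library’s urllib.robotparser. It asks whether Googlebot may fetch your homepage; if it can’t, the whole site is blocked. The function name and the www.example.com URL are placeholders, and Python’s parser only approximates Google’s rules (it uses first-match ordering and ignores * and $ wildcards inside paths), so treat the answer as a quick smoke test rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def blocks_whole_site(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt disallows the site root for user_agent.

    Uses Python's stdlib parser, which approximates (but does not exactly
    replicate) Google's robots.txt matching rules.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # If the homepage can't be fetched, every path on the site is blocked.
    # www.example.com is a placeholder; only the path "/" is checked.
    return not parser.can_fetch(user_agent, "https://www.example.com/")


staging_robots = "User-agent: *\nDisallow: /\n"
google_only = "User-agent: Googlebot\nDisallow: /\n"
healthy_robots = "User-agent: *\nDisallow: /wp-admin/\n"

print(blocks_whole_site(staging_robots))   # True
print(blocks_whole_site(google_only))      # True
print(blocks_whole_site(healthy_robots))   # False
```

Paste in the contents of your live robots.txt; a True result means the file needs fixing before anything else on this list matters.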

Your scripts and CSS directories aren’t blocked

- Crawlers need to see your scripts (which often create dynamic effects on your page) and CSS files (which add the formatting, styling, and colors). Today’s Google renders your page much like a browser does and factors in its style and layout (mobile-friendliness, for example), instead of simply looking at the text. You’re looking for lines like Disallow: /css/ or Disallow: /js/ in your file.

User-agent: *
Disallow: /css/

It used to be common practice to block these, but that advice has flipped completely around in the last few years. If you followed SEO and webmaster best practices from a few years ago, you might be blinding Google from fully rendering your pages (and missing out on the benefits that come with it).
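The same standard-library parser can check your asset folders. This sketch takes a robots.txt body and a list of CSS and JavaScript URLs (the paths and the function name are hypothetical) and returns the ones Googlebot is told not to fetch. The same caveats apply: Python’s parser uses first-match ordering and doesn’t understand wildcards inside paths.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Return the subset of resource_urls that robots_txt blocks for user_agent.

    Relies on Python's stdlib parser, an approximation of Google's matching
    (no support for * or $ wildcards inside paths).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


robots = "User-agent: *\nDisallow: /css/\nDisallow: /js/\n"
assets = [
    "https://www.example.com/css/main.css",  # hypothetical asset paths
    "https://www.example.com/js/app.js",
    "https://www.example.com/images/logo.png",
]
print(blocked_resources(robots, assets))
# ['https://www.example.com/css/main.css', 'https://www.example.com/js/app.js']
```

Any stylesheet or script that shows up in the result is one Google can’t use when it renders your page.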

2. Your sitemaps are working and submitted

- Tools required:

- Google Sitemap Testing Tool

- Google Search Console

- Bing Webmaster Tools

- Screaming Frog 6.0 or higher (free or paid version)

Your sitemap file or files should be available, formatted correctly, not blocked in robots.txt, and submitted to search engines. A sitemap helps Google “bulk receive” all your important pages. If everything checks out, Google should be able to discover and crawl all your important URLs, often within a few days. A few common issues to check:

Sitemaps are in XML format

- Test this with Google’s Sitemap Testing Tool.

Sitemap indexes are in the right format

- If you’ve broken your sitemap into smaller child sitemaps, you should have a sitemap index and it needs to be in the right format.
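Google’s testing tool is the authority here, but a quick local sanity check is easy. This minimal sketch uses Python’s xml.etree.ElementTree to confirm that a file parses as XML, tells you whether it’s a regular sitemap (a urlset) or a sitemap index (a sitemapindex) in the sitemaps.org namespace, and lists its loc entries. The function name and example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def inspect_sitemap(xml_text: str):
    """Return (kind, locs) for a sitemap document.

    kind is "urlset" for a regular sitemap or "sitemapindex" for an index
    of child sitemaps; any other root element raises ValueError.
    """
    root = ET.fromstring(xml_text)  # raises ET.ParseError if the XML is malformed
    if root.tag == f"{{{SITEMAP_NS}}}urlset":
        kind, child = "urlset", "url"
    elif root.tag == f"{{{SITEMAP_NS}}}sitemapindex":
        kind, child = "sitemapindex", "sitemap"
    else:
        raise ValueError(f"unexpected root element: {root.tag}")
    locs = [
        loc.text.strip()
        for loc in root.findall(f"{{{SITEMAP_NS}}}{child}/{{{SITEMAP_NS}}}loc")
        if loc.text
    ]
    return kind, locs


index_xml = """<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://www.example.com/sitemap-posts.xml</loc></sitemap>
  <sitemap><loc>https://www.example.com/sitemap-pages.xml</loc></sitemap>
</sitemapindex>"""
print(inspect_sitemap(index_xml))
```

For an index, you can then run the same check on each child sitemap URL it lists.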

Sitemap doesn’t have broken or unnecessary links

- Broken links in your sitemap send a bad message to search engines. Make sure all of the URLs in your sitemap work before you submit it to Google. Screaming Frog has a feature that does just this. Enter your sitemap URL, and it will crawl for broken links (and other issues). Check out number three on this page here to see how it works.

- Only include pages that resolve (return a 200 status code), that are the canonical (representative) version of the page, and that are important for search engines to rank.
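If you build your sitemap from a crawl export, the first two rules translate into a simple filter. In this sketch, CrawledPage is a hypothetical shape for one row of your crawl data (URL, status code, canonical target), and a page with no canonical tag is assumed to be canonical to itself. Whether a page is “important” still needs your judgment, so that rule isn’t automated here.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class CrawledPage:
    """Hypothetical shape for one row of a crawl export."""
    url: str
    status: int                      # HTTP status code the URL returned
    canonical: Optional[str] = None  # rel="canonical" target; None = no tag found


def sitemap_worthy(page: CrawledPage) -> bool:
    """True if the page returns 200 and is its own canonical URL.

    Assumes a page with no canonical tag is canonical to itself.
    """
    return page.status == 200 and page.canonical in (None, page.url)


def build_sitemap(pages) -> str:
    """Serialize the sitemap-worthy pages as a sitemaps.org urlset document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if sitemap_worthy(page):
            url = ET.SubElement(urlset, "url")
            # ElementTree escapes characters such as & in the URL for us.
            ET.SubElement(url, "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")


crawl = [
    CrawledPage("https://www.example.com/", 200),
    CrawledPage("https://www.example.com/old-page", 301),  # redirect: left out
    CrawledPage("https://www.example.com/shoes?color=red", 200,
                canonical="https://www.example.com/shoes"),  # not canonical: left out
    CrawledPage("https://www.example.com/shoes", 200,
                canonical="https://www.example.com/shoes"),
]
print(build_sitemap(crawl))
```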

Sitemaps are submitted to Google Search Console and Bing Webmaster Tools

- Use the tools above to make sure your sitemaps are submitted! If search engines can’t find your pages, they can’t index them. Submitting your sitemap puts your content on their radar.

To really nail down how to create the perfect XML sitemap, check out Bill Sebald’s excellent post.

3. Your HTML isn’t broken

- Tools required:

- W3C Markup Validation Service

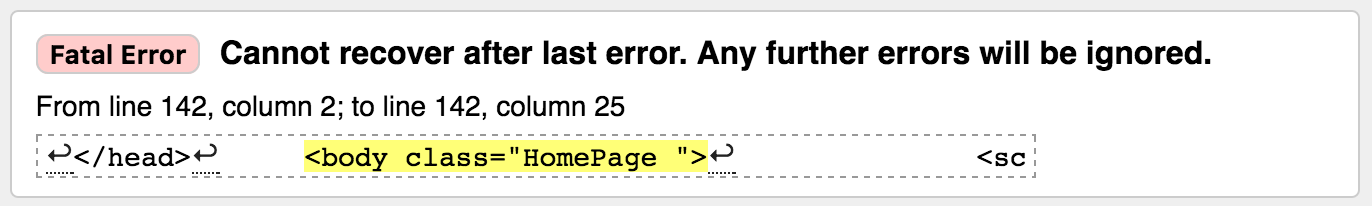

Broken HTML can happen to anyone. Whether a whole dev team is working on your site or it’s just you in your pajamas, it’s pretty easy to forget to close a tag. Browsers are pretty good at working around these “quirks”, but it’s always better to make sure your HTML is valid for Google. Use the W3C validator tool to check a few important pages on your site. A few things you’re looking for in particular:

No unclosed tags

It’s easy to forget to close an element like <div> or <span>, and the validator helps you find it.
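To see roughly what the validator is doing for this particular check, here’s a simplified Python sketch using the standard library’s html.parser: it keeps a stack of open elements and reports any that are never closed. It’s only a rough pre-check (it will also flag end tags that HTML legitimately lets you omit, such as those for p or li elements), so the W3C validator remains the tool to trust. The class and function names are hypothetical.

```python
from html.parser import HTMLParser

# HTML elements that never have a closing tag.
VOID_ELEMENTS = {
    "area", "base", "br", "col", "embed", "hr", "img", "input",
    "link", "meta", "source", "track", "wbr",
}


class TagBalanceChecker(HTMLParser):
    """Tracks open elements on a stack and records any left unclosed."""

    def __init__(self):
        super().__init__()
        self.stack = []     # (tag, line number) for every element still open
        self.problems = []  # human-readable descriptions of issues found

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_ELEMENTS:
            self.stack.append((tag, self.getpos()[0]))

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS:
            return  # e.g. the implicit end of a self-closing <br/>
        # Walk back to the matching open tag; anything above it was never closed.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                for open_tag, line in self.stack[i + 1:]:
                    self.problems.append(f"<{open_tag}> opened on line {line} is not closed")
                del self.stack[i:]
                return
        self.problems.append(f"</{tag}> on line {self.getpos()[0]} has no matching open tag")


def find_unclosed_tags(html: str) -> list:
    checker = TagBalanceChecker()
    checker.feed(html)
    checker.close()
    # Whatever is still on the stack at the end of the document was never closed.
    leftovers = [f"<{tag}> opened on line {line} is not closed" for tag, line in checker.stack]
    return checker.problems + leftovers


page = """<html>
<body>
<div class="hero">
  <span>Welcome
</div>
</body>
</html>"""
for problem in find_unclosed_tags(page):
    print(problem)  # <span> opened on line 4 is not closed
```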

No code between the </head> and <body> tags on your site

Nothing other than whitespace or comments belongs between the closing </head> tag and the opening <body> tag, but stray code ends up there all the time.
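A quick way to spot this before running the validator is a regex over the page source. This minimal Python sketch (the function name is hypothetical) returns whatever sits between the closing head tag and the opening body tag, ignoring whitespace and comments. It’s a heuristic, not a parser, so it can be fooled if those tag strings appear inside a script or comment elsewhere on the page.

```python
import re

# Capture whatever sits between the closing </head> and the opening <body ...> tag.
BETWEEN_HEAD_AND_BODY = re.compile(r"</head\s*>(.*?)<body\b", re.IGNORECASE | re.DOTALL)
HTML_COMMENT = re.compile(r"<!--.*?-->", re.DOTALL)


def stray_code_after_head(html: str) -> str:
    """Return any non-whitespace, non-comment content between </head> and <body>."""
    match = BETWEEN_HEAD_AND_BODY.search(html)
    if not match:
        return ""  # pattern not found (e.g. tags omitted); nothing to report
    return HTML_COMMENT.sub("", match.group(1)).strip()


broken = ("<html><head><title>Shop</title></head>\n"
          "<script src='/js/chat.js'></script>\n"
          "<body><p>Hi</p></body></html>")
print(stray_code_after_head(broken))  # <script src='/js/chat.js'></script>
```

An empty string means nothing was found in that gap.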

Missing doctype

- You must declare your page’s doctype at the very top of the page (for HTML5, that’s <!DOCTYPE html>).

Missing language declarations

- You should declare your page’s language (for example, <html lang="en">) so search engines index you for the right language searches.
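Both declarations are easy to check in code. This sketch uses Python’s html.parser to look for a doctype declaration and a lang attribute on the html element; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class DoctypeLangChecker(HTMLParser):
    """Records the document's doctype declaration and the <html lang> value."""

    def __init__(self):
        super().__init__()
        self.doctype = None  # e.g. "DOCTYPE html"
        self.lang = None     # e.g. "en" or "en-GB"

    def handle_decl(self, decl):
        if decl.lower().startswith("doctype"):
            self.doctype = decl

    def handle_starttag(self, tag, attrs):
        if tag == "html" and self.lang is None:
            self.lang = dict(attrs).get("lang")


def check_doctype_and_lang(html: str) -> list:
    """Return a list of human-readable issues (empty if both are present)."""
    checker = DoctypeLangChecker()
    checker.feed(html)
    checker.close()
    issues = []
    if checker.doctype is None:
        issues.append("missing doctype (for HTML5 use <!DOCTYPE html>)")
    if not checker.lang:
        issues.append('missing lang attribute on <html> (e.g. <html lang="en">)')
    return issues


print(check_doctype_and_lang("<html><body><p>Hi</p></body></html>"))
```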

Validator makes it through your whole page

- If the validator says “Fatal Error” and “Cannot recover after the last error” partway through your page, then something is so wrong with your page that the tool can’t even continue testing.

Generally, it will show you where the major failure occurred, and you should take that info straight to your developer.


4. Your site isn’t unnecessarily slow

- Tools required:

- Google PageSpeed Insights

- GTmetrix

- Image editors such as Photoshop or GIMP (free), or an online compressor such as Compressor.io

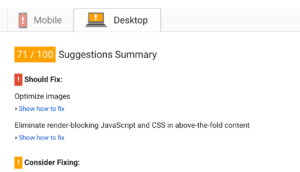

Page speed is an important Google ranking factor on both desktop and mobile. It’s so important, in fact, that Google has built a whole tool to help you find and fix issues. The excellent free GTmetrix picks up where Google leaves off with in-depth suggestions and instructions.

Google will even provide downloads of the compressed items for your site at the bottom of the PageSpeed Insights tool!

A few must-check items:

Images are optimized with lossless compression

- You should be using lossless compression to make your image file sizes as small as possible without sacrificing quality. It’s so important to Google that PageSpeed Insights literally compresses your images and shows you exactly how much space you’ll save.
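The tools above will do this for you, but to show what “lossless” means in practice, here’s a minimal Python sketch that re-deflates a PNG’s pixel data at zlib’s maximum level using only the standard library. The pixels come out byte-for-byte identical; only the file gets smaller. It’s a simplified illustration of one technique (dedicated optimizers go further, for example by trying different PNG filters and stripping metadata), and the function names, including the solid_png demo helper, are hypothetical.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def iter_chunks(data: bytes):
    """Yield (chunk_type, body) pairs from a PNG file's bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos < len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        yield data[pos + 4:pos + 8], data[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4-byte length + 4-byte type + body + 4-byte CRC


def make_chunk(chunk_type: bytes, body: bytes) -> bytes:
    """Serialize one PNG chunk; the CRC covers the type and the body."""
    crc = zlib.crc32(chunk_type + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + chunk_type + body + struct.pack(">I", crc)


def recompress_png(data: bytes) -> bytes:
    """Shrink a PNG losslessly by re-deflating its pixel data at zlib level 9.

    The decompressed pixel bytes are identical before and after, so image
    quality is untouched. Returns the input unchanged if nothing is saved.
    """
    chunks = list(iter_chunks(data))
    pixels = zlib.decompress(b"".join(body for kind, body in chunks if kind == b"IDAT"))
    packed = zlib.compress(pixels, 9)
    out, wrote_idat = [PNG_SIGNATURE], False
    for kind, body in chunks:
        if kind != b"IDAT":
            out.append(make_chunk(kind, body))  # other chunks copied as-is
        elif not wrote_idat:  # all IDAT data merged into one chunk
            out.append(make_chunk(b"IDAT", packed))
            wrote_idat = True
    result = b"".join(out)
    return result if len(result) < len(data) else data


def solid_png(width: int, height: int, level: int) -> bytes:
    """Hypothetical demo input: a solid-white 8-bit RGB PNG deflated at `level`."""
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    row = b"\x00" + b"\xff" * (3 * width)  # filter type 0, then RGB bytes
    idat = zlib.compress(row * height, level)
    return (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr)
            + make_chunk(b"IDAT", idat) + make_chunk(b"IEND", b""))


original = solid_png(200, 200, level=0)  # level 0 = stored, no compression
smaller = recompress_png(original)
print(len(original), "->", len(smaller), "bytes")
```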

Minimize render-blocking JavaScript

- Render-blocking scripts can slow a whole page down. Often, when a browser hits a script, it has to stop building the page until that script has downloaded and run, which delays everything after it. You want to make sure “above the fold” content generally loads before any big scripts. The simplest fix is often to move as much JavaScript as possible lower in your page. In the footer is great.
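To find candidates for moving, this Python sketch (the class name is hypothetical, built on the standard library’s html.parser) lists external scripts loaded in the head of the page. Scripts carrying the async or defer attribute don’t pause page parsing, so they’re skipped; inline scripts aren’t reported either, to keep the example short.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Lists external <script> tags inside <head> that lack async or defer."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []  # src values of scripts that hold up rendering

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attr = dict(attrs)  # boolean attributes like async map to None
            if "src" in attr and "async" not in attr and "defer" not in attr:
                self.blocking.append(attr["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


page = """<html><head>
<script src="/js/jquery.min.js"></script>
<script src="/js/analytics.js" async></script>
</head><body><p>Hello</p><script src="/js/slider.js"></script></body></html>"""
finder = BlockingScriptFinder()
finder.feed(page)
print(finder.blocking)  # ['/js/jquery.min.js']
```

The slider script isn’t reported because it already sits at the bottom of the body, which is exactly where the advice above says to put it.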

Scripts, images and other resources load quickly

- If your scripts, images or stylesheets load slowly, consider using a CDN (content delivery network). It’s so important to Google that they even host many commonly used scripts and stylesheets on their own free-to-use CDN!

Conclusion

If some of these things don’t quite make sense, Google Developers for Webmasters is a great resource to learn more about managing your site. While Google’s crawler has gotten better in recent years, it still needs some help crawling your site.

Don’t post-mortem lost sales after a campaign – batten down and button up beforehand, and don’t miss a beat.